Small Batch Size Neural Network

Revisiting Small Batch Training for Deep Neural Networks. Every time you pass a batch of data through the neural network, you have completed one iteration.

How AI Training Scales

Suppose that the training data has 32,000 instances and that the size of a minibatch (i.e., the batch size) is set to 32. One pass over the data then takes 32,000 / 32 = 1,000 iterations.
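The arithmetic behind this example can be sketched in a few lines of Python. The helper name `iterations_per_epoch` is hypothetical, and rounding up to keep a final partial batch is an assumption; the text does not say how leftover instances are handled.

```python
import math


def iterations_per_epoch(num_instances: int, batch_size: int) -> int:
    """Return how many minibatches are needed to see every instance once.

    Assumption: a final, smaller batch is kept rather than dropped,
    so the division is rounded up.
    """
    if num_instances < 0 or batch_size <= 0:
        raise ValueError("num_instances must be >= 0 and batch_size must be > 0")
    return math.ceil(num_instances / batch_size)


# The example from the text: 32,000 instances, batch size 32.
print(iterations_per_epoch(32000, 32))  # 1000
```

With a dataset size that is not a multiple of the batch size, for instance 100 instances and a batch size of 32, the same helper returns 4 under the round-up assumption.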

Graphcore. Batch size is typically chosen between 1 and a few hundred. A third reason is that the batch size is often set to something small, such as 32 examples, and is not tuned by the practitioner.

Small batch sizes such as 32 generally work well. Often, mini-batch sizes as small as 2 or 4 deliver optimal results. In the case of neural networks, one iteration means one forward pass and one backward pass over a batch.
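To make the forward pass and backward pass concrete, here is a minimal sketch of one iteration on a single mini-batch. It assumes a one-variable linear model with a mean squared error loss, chosen only for illustration; the function name `sgd_iteration`, the learning rate, and the parameter update step are assumptions that do not come from the text.

```python
def sgd_iteration(w, b, batch, lr=0.01):
    """Run one iteration on a mini-batch of (x, y) pairs.

    Illustrative assumption: the model is y_hat = w * x + b and the loss
    is the mean squared error over the batch.
    """
    n = len(batch)

    # Forward pass: compute predictions and the batch loss.
    preds = [w * x + b for x, _ in batch]
    loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, batch)) / n

    # Backward pass: gradients of the loss with respect to w and b.
    grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, batch)) / n
    grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, batch)) / n

    # Gradient descent update using the batch gradients.
    return w - lr * grad_w, b - lr * grad_b, loss


# One iteration on a mini-batch of size 2.
w, b, loss = sgd_iteration(0.0, 0.0, [(1.0, 2.0), (2.0, 4.0)], lr=0.1)
print(w, b, loss)
```

A real network replaces the hand-written gradients with backpropagation through many layers, but the shape of an iteration is the same: forward, backward, update.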

Modern deep neural network training is typically based on mini-batch stochastic gradient optimization. The notion of a minibatch is likely to appear in the context of training neural networks using gradient descent. 04/20/2018 by Dominic Masters et al.

Our results show that a new type of processor that can work efficiently on small mini-batch sizes will yield better neural network models, and faster. While the use of large mini-batches increases the available computational parallelism, small batch training has been shown to provide improved generalization performance and allows a significantly smaller memory footprint, which might also be exploited to improve machine throughput. When training data is split into small batches, each batch is called a minibatch.
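Splitting training data into minibatches can be sketched as follows. The generator name `minibatches`, the shuffling step, and the `seed` parameter are assumptions for illustration; the text only states that the data is split into small batches.

```python
import random


def minibatches(data, batch_size, shuffle=True, seed=None):
    """Yield successive minibatches (lists) drawn from data.

    Assumptions: instances are optionally shuffled once before splitting,
    and the last batch may be smaller than batch_size.
    """
    indices = list(range(len(data)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


# Ten instances split into minibatches of size 4, without shuffling.
data = list(range(10))
batches = list(minibatches(data, batch_size=4, shuffle=False))
print([len(b) for b in batches])  # [4, 4, 2]
```

Each yielded list would be passed through the network as one iteration; a smaller `batch_size` means more iterations per pass over the data and less memory per iteration.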

So batch size × number of iterations = one epoch, that is, one full pass over the training data. A batch size of 32 is a good default value. In all cases, the best results have been obtained with batch sizes of 32 or smaller.

Training Batch Icon

Https Openreview Net Pdf Id B1yy1bxcz

Revisiting Small Batch Training For Deep Neural Networks Arxiv

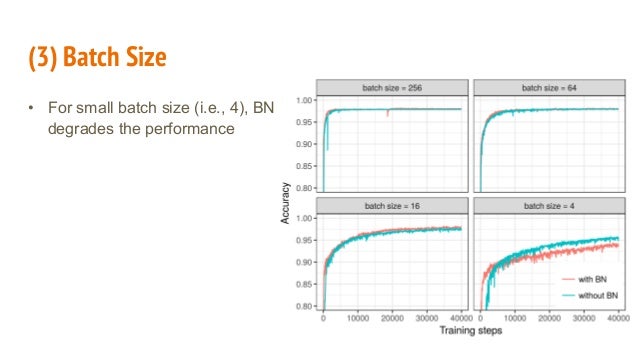

Why Batch Normalization Works So Well

Tip Reduce The Batch Size To Generalize Your Model Deep

https://arxiv.org/pdf/1705.08741

Can Small Sgd Batch Size Lead To Faster Overfitting Cross Validated

How To Control The Stability Of Training Neural Networks With The